[ Hybrid Cloud for Storage ]

An approach that uses AWS S3 together with on-premise storage. AWS storage services make it possible to connect on-premise storage with AWS storage.

- AWS is pushing for "hybrid cloud"

Part of your infrastructure is in the cloud

Part of your infrastructure is on-premise (physically owned)

- This can be due to

1) Long cloud migrations

2) Security requirements

3) Compliance requirements

4) IT strategy

- S3 is a proprietary storage technology (unlike EFS/NFS), so how do you expose the S3 data on-premise?

: AWS Storage Gateway

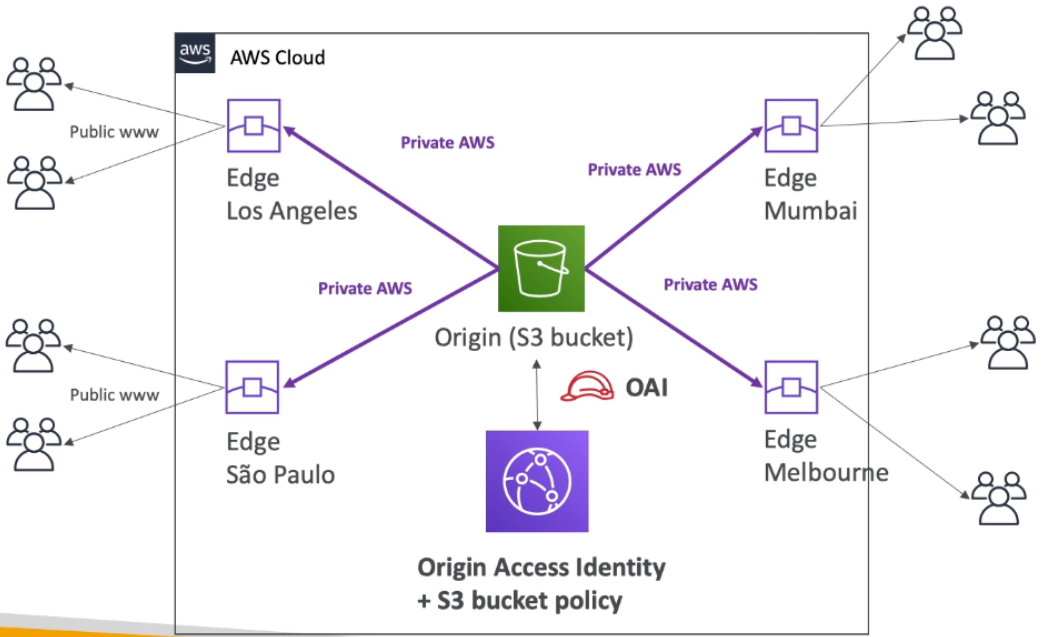

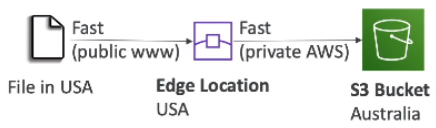

[ AWS Storage Gateway ]

Bridge between on-premise data and cloud data in S3

Use cases: disaster recovery (DR), backup & restore, tiered storage

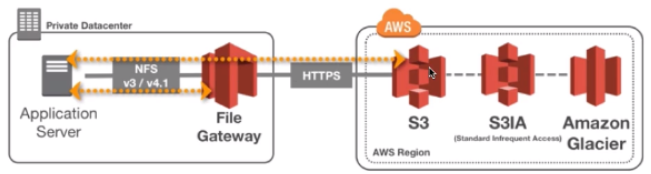

1. File Gateway

- Configured S3 buckets are accessible using the NFS and SMB protocols

- Supports S3 Standard, S3 Standard-IA, S3 One Zone-IA

- Bucket access using IAM roles for each File Gateway

- Most recently used data is cached in the file gateway

- Can be mounted on many servers

- Backed by S3
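To make the caching bullet concrete, here is a toy Python model of a read-through cache sitting in front of a bucket. It is a sketch under stated assumptions, not how AWS implements File Gateway: `FileGatewayCache` is a hypothetical class, a plain dict stands in for the S3 bucket, capacity is counted in files rather than bytes, and LRU eviction plus write-through uploads are simplifications.

```python
from collections import OrderedDict


class FileGatewayCache:
    """Hypothetical toy model of a file gateway's local cache (not an AWS API).

    `bucket` is a plain dict standing in for an S3 bucket (key -> bytes).
    `capacity` is the number of files the local cache may hold.
    """

    def __init__(self, bucket: dict, capacity: int) -> None:
        self.bucket = bucket
        self.capacity = capacity
        self.cache = OrderedDict()  # path -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def _remember(self, path: str, data: bytes) -> None:
        # Mark `path` as most recently used; evict the least recently used file if over capacity.
        self.cache[path] = data
        self.cache.move_to_end(path)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)

    def read(self, path: str) -> bytes:
        if path in self.cache:
            self.hits += 1  # served locally, low latency
            data = self.cache[path]
        else:
            self.misses += 1  # cache miss: fetch the object from the "bucket"
            data = self.bucket[path]
        self._remember(path, data)
        return data

    def write(self, path: str, data: bytes) -> None:
        # Simplified write-through: the object lands in the bucket and stays cached.
        self.bucket[path] = data
        self._remember(path, data)
```

Repeated reads of the same file are served from the local cache, while rarely used files fall out of the cache but remain available in the bucket.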

1-2. File Gateway - Hardware appliance

- Running a file gateway on-premise requires virtualization capability

If you don't have it, you can use a File Gateway Hardware Appliance

- You can buy it on amazon.com

- Helpful for daily NFS backups in small data centers

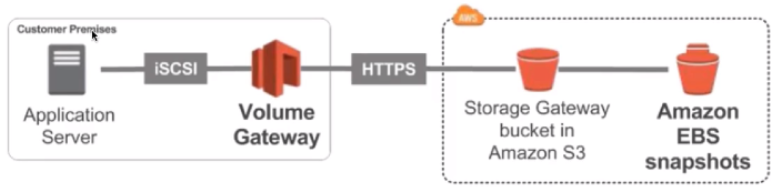

2. Volume Gateway

- Block storage using the iSCSI protocol, backed by S3

- Backed by EBS snapshots, which can help restore on-premise volumes

Cached volumes: low latency access to most recent data

Stored volumes: entire dataset is on-premise, scheduled backups to S3

- Backed by S3 with EBS snapshots
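The difference between cached and stored volumes is mainly where the primary copy of the data lives. The sketch below models both with dicts mapping block numbers to bytes. `CachedVolume`, `StoredVolume`, and their methods are hypothetical, uploads are treated as synchronous, and the snapshot list only stands in for EBS snapshots kept in S3.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CachedVolume:
    """Hypothetical model: primary data in S3, only recently used blocks kept locally."""

    cache_blocks: int
    s3: Dict[int, bytes] = field(default_factory=dict)     # primary copy
    local: Dict[int, bytes] = field(default_factory=dict)  # insertion order = recency

    def write(self, block: int, data: bytes) -> None:
        self.s3[block] = data  # simplified: upload to S3 happens immediately
        self._touch(block, data)

    def read(self, block: int) -> bytes:
        data = self.local[block] if block in self.local else self.s3[block]
        self._touch(block, data)
        return data

    def _touch(self, block: int, data: bytes) -> None:
        # Move the block to the "most recent" end and evict the oldest blocks if over capacity.
        self.local.pop(block, None)
        self.local[block] = data
        while len(self.local) > self.cache_blocks:
            del self.local[next(iter(self.local))]


@dataclass
class StoredVolume:
    """Hypothetical model: full dataset on-premise, point-in-time copies sent to S3."""

    local: Dict[int, bytes] = field(default_factory=dict)
    snapshots: List[Dict[int, bytes]] = field(default_factory=list)  # stand-in for EBS snapshots

    def write(self, block: int, data: bytes) -> None:
        self.local[block] = data

    def read(self, block: int) -> bytes:
        return self.local[block]  # always served locally

    def scheduled_snapshot(self) -> int:
        self.snapshots.append(dict(self.local))  # copy, so later writes don't alter it
        return len(self.snapshots) - 1

    def restore(self, snapshot_id: int) -> None:
        self.local = dict(self.snapshots[snapshot_id])
```

A cached volume needs only enough local disk for its working set, while a stored volume keeps low-latency access to everything and relies on the snapshots for recovery.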

3. Tape Gateway

- Some companies have backup processes using physical tapes

- With Tape Gateway, companies keep the same processes, but the tapes live in the cloud

- Virtual Tape Library (VTL) backed by Amazon S3 and Glacier

- Back up data using existing tape-based processes (and iSCSI interface)

- Works with leading backup software vendors

- Backed by S3 and Glacier
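The lifecycle of a virtual tape can be sketched as a small state model: tapes are written while they sit in the virtual tape library (S3), ejecting a tape moves it to the archive (Glacier), and an archived tape has to be retrieved before it can be read. `ToyTapeGateway` and `VirtualTape` are hypothetical names, not AWS SDK classes, and treating retrieved tapes as read-only reflects my understanding of the service rather than anything stated above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Location(Enum):
    LIBRARY = "virtual tape library (backed by S3)"
    ARCHIVE = "tape archive (backed by Glacier)"


@dataclass
class VirtualTape:
    barcode: str
    location: Location = Location.LIBRARY
    read_only: bool = False
    backups: List[str] = field(default_factory=list)


class ToyTapeGateway:
    """Hypothetical model of the virtual tape lifecycle (not an AWS API)."""

    def __init__(self) -> None:
        self.tapes: Dict[str, VirtualTape] = {}

    def create_tape(self, barcode: str) -> VirtualTape:
        tape = VirtualTape(barcode)
        self.tapes[barcode] = tape
        return tape

    def write_backup(self, barcode: str, backup: str) -> None:
        tape = self.tapes[barcode]
        if tape.location is not Location.LIBRARY or tape.read_only:
            raise PermissionError(f"tape {barcode} is not writable")
        tape.backups.append(backup)

    def eject(self, barcode: str) -> None:
        # The backup software ejecting a tape moves it to the archive tier.
        self.tapes[barcode].location = Location.ARCHIVE

    def retrieve(self, barcode: str) -> None:
        # Bring an archived tape back to the library; this model treats it as read-only.
        tape = self.tapes[barcode]
        tape.location = Location.LIBRARY
        tape.read_only = True

    def read_backups(self, barcode: str) -> List[str]:
        tape = self.tapes[barcode]
        if tape.location is not Location.LIBRARY:
            raise LookupError(f"tape {barcode} is archived; retrieve it first")
        return list(tape.backups)
```

From the backup software's point of view nothing changes: it still writes to and ejects "tapes", which is why existing tape-based processes keep working.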

[ Amazon FSx for Windows ]

A Windows counterpart to EFS, introduced to complement EFS, which can only be used on Linux

- EFS is a shared POSIX file system for Linux systems

- FSx for Windows is a fully managed Windows file system share drive

- Supports SMB protocol & Windows NTFS

- Microsoft Active Directory integration, ACLs, user quotas

- Built on SSD; scales up to 10s of GB/s, millions of IOPS, and 100s of PB of data

- Can be accessed from your on-premise infrastructure

- Can be configured to be Multi-AZ

- Data is backed up daily to S3

[ Amazon FSx for Lustre ]

Clustered Linux: a distributed file system that supports high-performance workloads such as machine learning

- The name Lustre is derived from "Linux" and "cluster"

- Lustre is a type of parallel distributed file system, for large-scale computing

- Machine Learning, High Performance Computing (HPC)

- Video Processing, Financial Modeling, Electronic Design Automation

- Scales up to 100s GB/s, millions of IOPS, sub-ms latencies

- Seamless integration with S3

Can "read S3" as a file system (through FSx)

Can write the output of the computations back to S3 (through FSx)

- Can be used from on-premise servers
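The S3 integration roughly works like this: when the file system is linked to a bucket, the objects show up as files, a file's contents are loaded from S3 the first time it is read, and results written to the file system can be exported back to S3. The toy model below shows that flow. `LustreOverS3`, the dict standing in for the bucket, and the `results/` export prefix are all hypothetical.

```python
from typing import Dict, List, Optional


class LustreOverS3:
    """Hypothetical toy model of FSx for Lustre linked to an S3 bucket (not an AWS API)."""

    def __init__(self, bucket: Dict[str, bytes], export_prefix: str = "results/") -> None:
        self.bucket = bucket
        self.export_prefix = export_prefix
        # Only metadata is imported up front: every object appears as a file with no content yet.
        self.files: Dict[str, Optional[bytes]] = {key: None for key in bucket}
        self.loaded_from_s3 = 0

    def listdir(self) -> List[str]:
        return sorted(self.files)

    def read(self, path: str) -> bytes:
        if self.files[path] is None:
            # Lazy load: fetch the object's contents from S3 on first access only.
            self.files[path] = self.bucket[path]
            self.loaded_from_s3 += 1
        return self.files[path]

    def write(self, path: str, data: bytes) -> None:
        self.files[path] = data  # computation output lives on the file system first

    def export(self, paths: List[str]) -> None:
        # Write selected files back to the bucket under the export prefix.
        for path in paths:
            self.bucket[self.export_prefix + path] = self.read(path)
```

Because contents are fetched only when first read, a job can start working on a large bucket without copying all of it up front.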

# Storage Comparison

- S3 : Object Storage

- Glacier : Object Archival

- EFS : Network File System for many Linux instances, POSIX filesystem

- EBS Volumes : Network storage for one EC2 instance at a time

- FSx for Windows : Network File System for Windows servers

- FSx for Lustre : High Performance Computing Linux file system

- Instance Storage : Physical storage for your EC2 instance (high IOPS)

- Storage Gateway : File Gateway, Volume Gateway (cache & stored), Tape Gateway

- Snowball / Snowmobile : to move large amounts of data to the cloud, physically

- Database : for specific workloads, usually with indexing and querying
