[ S3 Performance ]

오토스케일링이 되며, request 는 prefix 마다 받을 수 있는 양이 있으므로 prefix 를 늘려 성능을 향상시킬 수 있음

- Amazon S3 automatically scales to high request rates, latency 100-200ms

- Your application can achieve at lease 3500 PUT/COPY/POST/DELETE and 5500 GET/HEAD requests per second per prefix in a bucket

- There are no limits to the number of prefixes in a bucket

- prefix Example (object path -> prefix) :

1) bucket/forder1/sub1/file -> prefix : /folder1/sub1/

2) bucket/forder1/sub2/file -> prefix : /folder1/sub2/

3) bucket/1/file -> prefix : /2/

4) bucket/2/file -> prefix : /2/

* If you spread reads across all four prefixes evenly, you can achieve 22000 requests per second for GET and HEAD

[ S3 KMS Limitation ]

SSE-KMS encryption 사용시 KMS 암복호화로 인해 성능에 문제가 생길 수 있음

- If you use SSE-KMS, you may be impacted(영향받는) by the KMS limits

- When you upload, it calls the GenerateDataKey KMS API

- When you download, it calls the Decrypt KMS API

- Count towards the KMS quota per second (5500, 10000, 30000 req/s based on region)

- As of today, you cannot request a quota increase for KMS

[ S3 Performance ]

1 UPLOAD

1) Multi-part upload

- recommended for files > 100MB

- must use for files > 5GB

- Can help parallelize uploads (speed up transfers)

2) S3 Transfer Acceleration (upload only)

- Increase transfer speed by transferring file to an AWS edge location which will forward the data to the S3 bucket in the target region

- Compatible with multi-part upload

2 DOWNLOAD :

1) S3 Byte-Range Fetches

- Paralleize GETs by requesting specific byte ranges

- Better resilience in case of failures

- Can be used to speed up downloads

- Can be used to retrieve only partial data (for example the head of a file)

[ S3 Select & Glacier Seletct ]

S3 서버사이드 필터링으로 고성능

- Retrieve less data using SQL by performing server side filtering

- Can filter by rows & columns (simple SQL statements)

- Less network transfer, less CPU cost client-side

[ S3 Event Notifications ]

S3 이벤트 발생시 SNS, SQS, Lambda function 등의 노티를 받을 수 있음

bucket versioning 을 활성화 시켜야 함

- ObjectCreated, ObjectRemoved, ObjectRestore, Replication...

- Object name filtering possible (ex: *.jpg)

ex: generate thumbnails of images uploaded to S3

- Can create as many "S3 events" as desired

- can email/notification, add message into a queue, call Lambda Functions to generate some custom code

- S3 event notifications typically deliver events in seconds but can sometimes take a minute or longer

- If two writes are made to a single non-versioned object at the same time, it is possible that only a single event notification will be sent

- If you want to ensure that an event notification is sent for every successful write, you should enable versioning on you bucket

[ AWS Athena ]

S3 Bucket 에 file 을 두고 sql 로 직접 조회/분석이 가능

Serverless service to perform analytics directly against S3 files

- Uses SQL language to query the files

- Has a JDBC/ODBC driver

- Charged per query and amount of data scanned

- Supports CSV, JSON, ORC, Avro, and Parquet (built on Presto)

Use cases: Business intelligence/analytics/reporting, analyze & query VPC Flow Logs, ELB Logs, CloudTrail trails...

* to Analyze data directly on S3, use Athena

[ S3 Object Lock & Glacier Vault Lock ]

1) S3 Object Lock : 정해진 시간동안 LOCK

Adopt a WORM (Write Once Read Many) model

Block an object version deletion for a specified amount of time

2) Glacier Vault Lock : 한번 설정시 파일 수정/삭제 절대불가

Adopt a WORM model

Lock the policy for future edits (can no longer be changed)

Helpful for compliance and data retention(보유)

'infra & cloud > AWS' 카테고리의 다른 글

| [AWS] 10-2. CloudFront Signed URL / Cookies, Global Accelerator (0) | 2021.04.11 |

|---|---|

| [AWS] 10-1. AWS CloudFront (0) | 2021.04.11 |

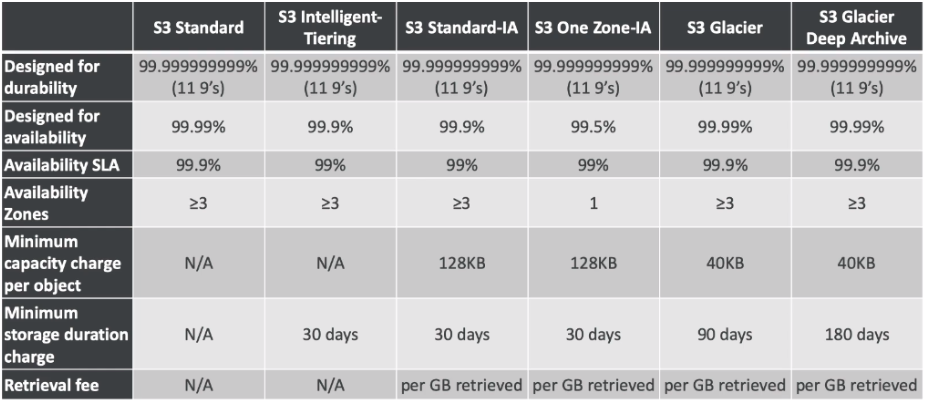

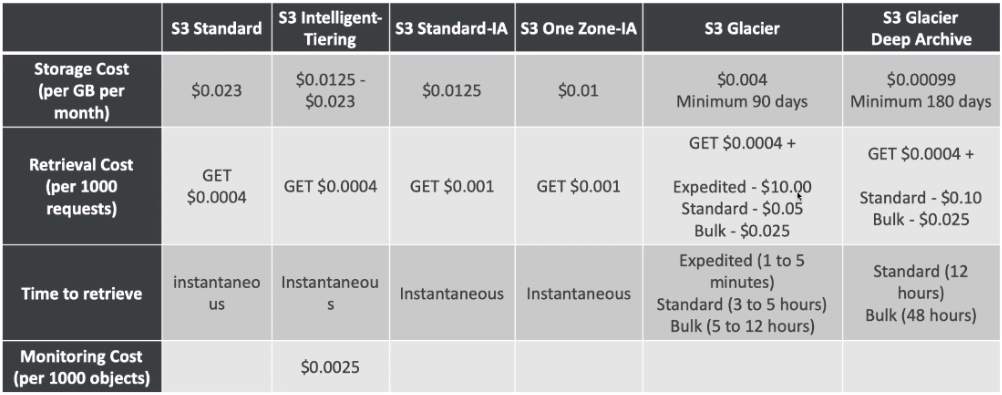

| [AWS] 9-3. Storage Classes + Glacier (0) | 2021.04.06 |

| [AWS] 9-2. S3 Access Logs, S3 Replication (0) | 2021.04.04 |

| [AWS] 9-1. S3 MFA Delete (0) | 2021.04.03 |